Wealthfront is a leading robo-advisor in the fintech domain offering investment-related services to its clients. To enable rapid experimentation in our product, we overhauled our event tracking system and moved it from on-premises infrastructure to the AWS cloud. Wealthfront uses event data generated by user actions on web, iOS, and Android to provide better product experiences. Tracked event data enables use cases in the following areas:

- Marketing – understanding the effectiveness of acquisition channels and better targeting

- A/B Testing – testing the adoption of new features in different segments

- User Retention Analysis – behavior that predicts churn

In this blog post we explain the high-level design of our new event tracking system and the subsystems involved.

Before we dive into the design, let’s first look at the problems with our existing system.

Current Pain Points

- Data Quality – We had a lot of trouble understanding the definition of events in our existing system. The same semantic events looked quite different when sent from different clients such as iOS, Android, and Web. The Data Science and Product Management teams had to do a lot of customization in the downstream pipelines to reconcile the definitions and make sense of the data.

- No Single Source of Truth – Since multiple teams are involved in logging these events, their definitions are deeply embedded within respective code repos. This makes it difficult for data engineering and product teams to understand the event definitions and make sense of the output tables.

- Scalability – The existing system was designed on the assumption that occasional data loss was acceptable. Newer analyses and models cannot tolerate data loss, and the system has to operate at a much higher scale.

To address the Data Quality and No Single Source of Truth problems, we decided to go with a Structured Event Data Model. To address Scalability, we built a new data ingestion and processing framework on AWS cloud infrastructure.

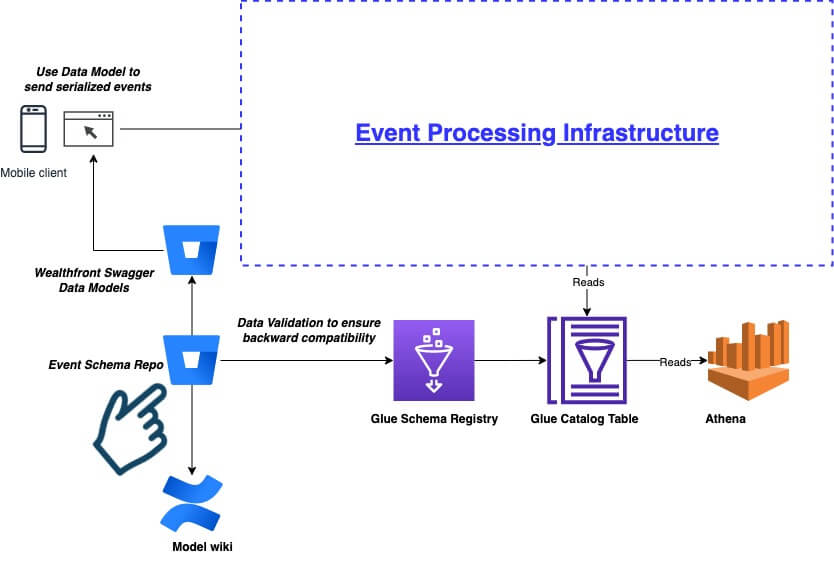

Event Data Model Framework

Event Data Model Component

Schema Definition

We chose Avro for the event definition schema because it has good community support and is already used extensively in our data platform. We extended our Maven build process with custom annotations in the Avro model, such as @glue and @swagger. These annotations sync event definitions with the Glue Catalog (the AWS Glue Data Catalog is a technical metadata service that allows various AWS services to discover and interoperate based on metadata) and generate client-side Swagger API code for web and mobile platforms (Swagger, now OpenAPI, is a specification for describing REST APIs).

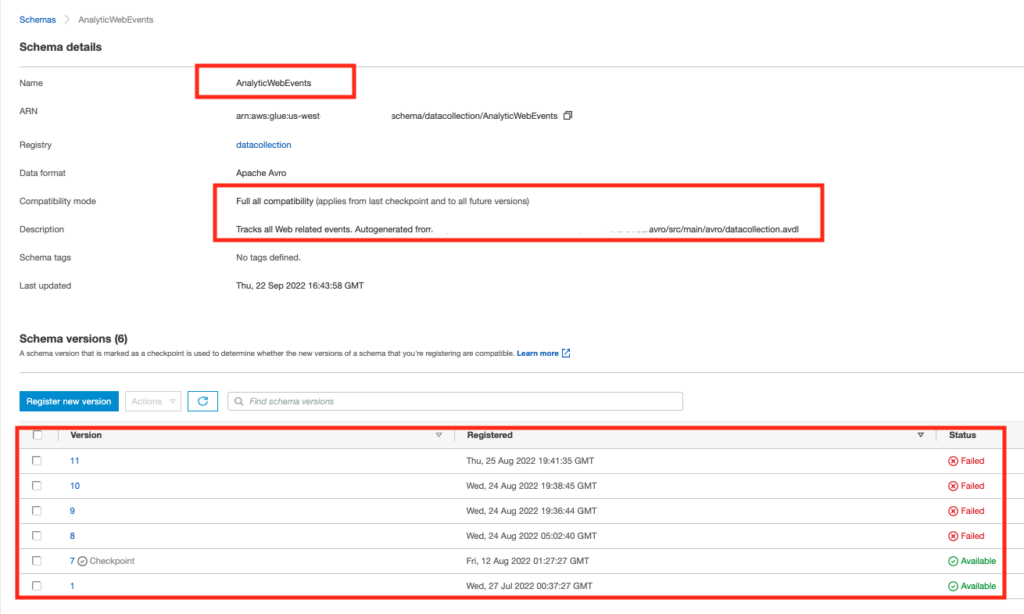
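To make the idea concrete, here is a sketch of what an annotated Avro event definition might look like, written as a Python dictionary. The event name, namespace, fields, and the way `glue` and `swagger` appear as custom attributes are all assumptions for illustration; the post does not show the actual annotation syntax.

```python
import json

# Hypothetical event definition. Avro permits extra attributes on a schema
# as metadata, so custom markers can ride along with the record definition.
# The "glue" and "swagger" keys are illustrative, not the real annotations.
BUTTON_CLICK_SCHEMA = {
    "type": "record",
    "name": "ButtonClick",
    "namespace": "com.example.events",
    "glue": {"table": "button_click"},
    "swagger": {"path": "/events/button-click"},
    "fields": [
        {"name": "user_id", "type": "string"},
        {
            "name": "platform",
            "type": {
                "type": "enum",
                "name": "Platform",
                "symbols": ["WEB", "IOS", "ANDROID"],
            },
        },
        {"name": "button_name", "type": "string"},
        {"name": "client_timestamp_ms", "type": "long"},
        # Optional field with a default, so older events without it stay readable.
        {"name": "experiment_id", "type": ["null", "string"], "default": None},
    ],
}


def custom_annotations(schema):
    """Return attributes that are not part of the core Avro record definition."""
    core = {"type", "name", "namespace", "doc", "aliases", "fields"}
    return {key: value for key, value in schema.items() if key not in core}


print(json.dumps(custom_annotations(BUTTON_CLICK_SCHEMA), indent=2))
```

A build step could read these extra attributes to decide which catalog table or API path an event maps to, while the core fields remain a plain Avro record.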

Schema Validation

To make sure the event schema evolves in a backward-compatible way, we use the AWS Glue Schema Registry to validate our event definitions. The Avro event data model is then used to update the Athena table definition in the Glue Catalog (Amazon Athena is a SQL engine that lets us view data in tabular form based on a schema). The compatibility mode for the schema is set to FULL_ALL, which allows consumers to read data written by producers using all previous schema versions. The two main reasons for this choice are:

- Mobile clients are likely to send late-arriving events because of slow or absent internet connectivity on the edge devices. These late-arriving events conform to an older schema.

- Event logging is implemented in sprint cycles that are not in lockstep with the evolution of the event model, so there are always events being tracked with older model definitions.

Therefore, we have to choose a compatibility mode that allows us to read older events using the new model.
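The compatibility requirement can be illustrated with a heavily simplified check. The sketch below is not the Glue Schema Registry algorithm. It handles only flat records and ignores Avro type promotion, unions, and aliases. The function names and sample schemas are hypothetical.

```python
def can_read(reader, writer):
    """Simplified Avro resolution for flat records.

    Every field the reader expects must either exist in the writer schema
    with the same type, or carry a default value in the reader schema.
    Extra writer fields are ignored by the reader.
    """
    writer_types = {f["name"]: f["type"] for f in writer["fields"]}
    for field in reader["fields"]:
        if field["name"] in writer_types:
            if writer_types[field["name"]] != field["type"]:
                return False
        elif "default" not in field:
            return False
    return True


def is_full_all_compatible(candidate, previous_versions):
    """Check the candidate against every earlier version, in both directions."""
    return all(
        can_read(candidate, old) and can_read(old, candidate)
        for old in previous_versions
    )


v1 = {"fields": [{"name": "user_id", "type": "string"}]}
v2 = {"fields": v1["fields"] + [
    {"name": "experiment_id", "type": ["null", "string"], "default": None},
]}
# Adds a required field without a default, so old events cannot be read.
v3_bad = {"fields": v2["fields"] + [{"name": "session_id", "type": "string"}]}

print(is_full_all_compatible(v2, [v1]))          # optional field with default
print(is_full_all_compatible(v3_bad, [v1, v2]))  # required field, no default
```

Under this simplified rule, a late-arriving event written with `v1` can still be read with `v2` because the new field has a default, which is the property the two reasons above depend on.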

Client Model

The Swagger annotation on the Avro event model generates the Swagger 2.0 spec, which is then used to generate client-side code. Swagger Codegen parses an OpenAPI/Swagger definition written in JSON or YAML and generates code using Mustache templates. Here is an earlier Wealthfront blog post which touches upon this topic.

Event Processing Infrastructure

This is the high-level design of the system that captures events from the edge devices and processes them into our Data Platform. The main subsystems involved are API Gateway, Kinesis Data Streams, Kinesis Data Firehose, and AWS Lambda.

API Gateway

We have language-specific client-side libraries that make POST requests to the API Gateway with a structured event payload serialized as JSON. We use Lambda native integration to write the payload to Kinesis Streams. Finally, the data is binary serialized and stored in S3 in our data platform.
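As a rough illustration of the client side, the sketch below builds the kind of POST request a client library might send. The endpoint URL, event field names, and function name are assumptions made up for this example; the real libraries are generated from the Swagger spec and are not shown in this post.

```python
import json
import urllib.request

# Hypothetical endpoint; the real API Gateway URL is not given in the post.
EVENTS_URL = "https://api.example.com/events"

# Hypothetical structured event payload.
SAMPLE_EVENT = {
    "event_type": "ButtonClick",
    "user_id": "user-123",
    "platform": "WEB",
    "button_name": "open_account",
    "client_timestamp_ms": 1650000000000,
}


def build_event_request(event, url=EVENTS_URL):
    """Serialize the event as JSON and wrap it in a POST request."""
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_event_request(SAMPLE_EVENT)
print(request.get_method(), request.full_url)

# Sending is left out so the sketch runs offline. A real client would do
# something like:
#   with urllib.request.urlopen(request, timeout=5) as response:
#       response.read()
```

Keeping the client timestamp in the payload is one way to make late-arriving events identifiable downstream, though the post does not say which fields the real events carry.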

Kinesis Streams

Kinesis Streams is configured to retain events for the past 7 days, which lets us reprocess data in case of any Lambda failures.

Kinesis Firehose

Kinesis Firehose writes the Kinesis Stream data to S3 every 5 minutes or once 128 MiB of data has accumulated, whichever comes first. It uses the Glue table information to conform the data to the event schema and writes the data in Parquet format (Parquet is a column-oriented data file format for efficient storage and retrieval) for downstream consumption.
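The time-or-size buffering rule can be pictured with a small simulation. This is only a conceptual sketch of the flush condition, not how Firehose is implemented or configured; the class and attribute names are invented for the example.

```python
BUFFER_INTERVAL_SECONDS = 5 * 60        # 5 minutes
BUFFER_SIZE_BYTES = 128 * 1024 * 1024   # 128 MiB


class BufferSketch:
    """Collects records and flushes on size or age, whichever comes first."""

    def __init__(self, max_bytes=BUFFER_SIZE_BYTES,
                 max_age_seconds=BUFFER_INTERVAL_SECONDS):
        self.max_bytes = max_bytes
        self.max_age_seconds = max_age_seconds
        self.records = []
        self.size = 0
        self.opened_at = None   # time the first buffered record arrived
        self.flushed = []       # each entry stands in for one S3 object

    def add(self, record, now):
        if self.opened_at is None:
            self.opened_at = now
        self.records.append(record)
        self.size += len(record)
        self.maybe_flush(now)

    def maybe_flush(self, now):
        """Flush if the buffer is full or old enough. Returns True on flush."""
        if not self.records:
            return False
        too_big = self.size >= self.max_bytes
        too_old = now - self.opened_at >= self.max_age_seconds
        if too_big or too_old:
            self.flushed.append(list(self.records))
            self.records, self.size, self.opened_at = [], 0, None
            return True
        return False


# Tiny limits so the behavior is easy to follow.
buffer = BufferSketch(max_bytes=10, max_age_seconds=300)
buffer.add(b"12345", now=0)
buffer.add(b"67890", now=1)      # reaches 10 bytes, so the buffer flushes
buffer.add(b"a", now=10)
buffer.maybe_flush(now=310)      # 300 seconds after the record at t=10
print(len(buffer.flushed))
```

At low traffic the time limit bounds how stale the data in S3 can be, and at high traffic the size limit bounds how large each object grows.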

AWS Lambda

We have configured two Lambdas for each platform (Web/iOS/Android) to process events in the Kinesis stream. The first Lambda processes events in batches. In case of failure, it splits the batch in half repeatedly until it either processes the events successfully or narrows the failure down to individual events. Each failed event is then logged to SQS, and the first Lambda continues to process the stream. The second Lambda reads the SQS messages for failed events and reattempts processing. If that attempt also fails, the event is sent to the dead-letter queue and the on-call engineer is alerted through PagerDuty to investigate. Once the Lambda code is fixed, the dead-letter queue messages are redirected to SQS for reprocessing. We use the custom checkpointing feature of AWS Lambda to avoid duplicate processing of events during batch splits.
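The batch-splitting idea can be sketched in plain Python. This is an illustration of the bisection logic only, with hypothetical names; in the system described above the splitting is handled around the Lambda and Kinesis integration and failed events go to SQS, whereas here they are simply returned as a list.

```python
def process_with_bisect(records, handler):
    """Process a batch, halving it on failure to isolate bad records.

    Returns the records that still fail when processed on their own.
    """
    if not records:
        return []
    try:
        handler(records)
        return []
    except Exception:
        if len(records) == 1:
            return list(records)
        mid = len(records) // 2
        return (process_with_bisect(records[:mid], handler)
                + process_with_bisect(records[mid:], handler))


processed = []


def handle_batch(batch):
    """Hypothetical handler: rejects the whole batch if any record is malformed."""
    if any(record.get("malformed") for record in batch):
        raise ValueError("batch contains a malformed record")
    processed.extend(record["id"] for record in batch)


batch = [{"id": i, "malformed": i == 5} for i in range(8)]
failed = process_with_bisect(batch, handle_batch)

print("processed:", processed)
print("failed:", [record["id"] for record in failed])
```

The handler in this sketch commits nothing when a batch fails, so no record is processed twice. A handler with partial side effects would need something like the checkpointing mentioned above to avoid duplicates.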

Monitoring and Alerting

The health of the system is monitored using CloudWatch alarms, and all alarm state changes are pushed to Amazon EventBridge (an AWS service that receives events from a variety of sources, such as CloudWatch alarms, and matches them against event rules). An event rule is configured to detect all CloudWatch alarm state changes, with an SNS topic set as the target. The monitoring Lambda listens to the state change events in SNS and notifies the on-call engineer via PagerDuty for further troubleshooting. Using EventBridge lets us detect state changes and resolve PagerDuty alerts automatically once an alarm transitions to the OK state.
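A minimal sketch of what the monitoring Lambda's core logic could look like is shown below. It assumes the SNS message body is the EventBridge "CloudWatch Alarm State Change" event and that alerts are sent in the PagerDuty Events API v2 format. The function name, the routing key value, and the choice of alarm name as the deduplication key are assumptions, and the HTTP call to PagerDuty is left out.

```python
import json

# Event pattern an EventBridge rule could use to match alarm state changes.
ALARM_STATE_CHANGE_PATTERN = {
    "source": ["aws.cloudwatch"],
    "detail-type": ["CloudWatch Alarm State Change"],
}


def pagerduty_event_from_sns(sns_event, routing_key):
    """Map an alarm state change delivered via SNS to a PagerDuty event body.

    ALARM opens an incident, OK resolves it, and any other state
    (for example INSUFFICIENT_DATA) is ignored by returning None.
    """
    message = json.loads(sns_event["Records"][0]["Sns"]["Message"])
    detail = message["detail"]
    state = detail["state"]["value"]

    if state == "ALARM":
        action = "trigger"
    elif state == "OK":
        action = "resolve"
    else:
        return None

    event = {
        "routing_key": routing_key,
        "event_action": action,
        # Using the alarm name as the dedup key lets a later "resolve"
        # close the incident opened by the matching "trigger".
        "dedup_key": detail["alarmName"],
    }
    if action == "trigger":
        event["payload"] = {
            "summary": f"CloudWatch alarm {detail['alarmName']} is in ALARM",
            "source": message["source"],
            "severity": "critical",
        }
    return event


def sample_sns_event(alarm_name, state):
    """Build a minimal SNS-wrapped alarm state change for local testing."""
    message = {
        "source": "aws.cloudwatch",
        "detail-type": "CloudWatch Alarm State Change",
        "detail": {"alarmName": alarm_name, "state": {"value": state}},
    }
    return {"Records": [{"Sns": {"Message": json.dumps(message)}}]}


print(pagerduty_event_from_sns(sample_sns_event("lambda-errors", "ALARM"), "KEY"))
```

Because the trigger and resolve events share a deduplication key, the transition back to OK can close the same incident without manual intervention.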

Conclusion

In this blog post, we have shown how a structured event model and a new AWS processing infrastructure improved our event data capture and processing. The structured event model had a significant impact on the overall data quality of the event streams. The new AWS processing infrastructure is highly scalable and performant, and a major improvement over our previous processing infrastructure.

References

Amazon Athena is an interactive query service that makes it easy to analyze data in Amazon S3 using standard SQL. Athena is serverless, so there is no infrastructure to manage, and you pay only for the queries that you run.

AWS Glue is a serverless data integration service that makes it easier to discover, prepare, move, and integrate data from multiple sources for analytics, machine learning (ML), and application development.

AWS Glue Schema Registry is a new feature that allows you to centrally discover, control, and evolve data stream schemas.

AWS Lambda is a serverless, event-driven compute service that lets you run code for virtually any type of application or backend service without provisioning or managing servers.

Amazon API Gateway is a fully managed service that makes it easy for developers to create, publish, maintain, monitor, and secure APIs at any scale.

Amazon Kinesis Data Streams is a serverless streaming data service that makes it easy to capture, process, and store data streams at any scale.

Amazon Kinesis Data Firehose is an extract, transform, and load (ETL) service that reliably captures, transforms, and delivers streaming data to data lakes, data stores, and analytics services.

Amazon Simple Notification Service (SNS) sends notifications in two ways, A2A and A2P. A2A provides high-throughput, push-based, many-to-many messaging between distributed systems, microservices, and event-driven serverless applications.

Amazon CloudWatch collects and visualizes real-time logs, metrics, and event data in automated dashboards to streamline your infrastructure and application maintenance.

Amazon EventBridge is a serverless event bus that lets you receive, filter, transform, route, and deliver events.

Disclosures

The information contained in this communication is provided for general informational purposes only, and should not be construed as investment or tax advice. Nothing in this communication should be construed as a solicitation or offer, or recommendation, to buy or sell any security. Any links provided to other server sites are offered as a matter of convenience and are not intended to imply that Wealthfront Advisers or its affiliates endorses, sponsors, promotes and/or is affiliated with the owners of or participants in those sites, or endorses any information contained on those sites, unless expressly stated otherwise.

All investing involves risk, including the possible loss of money you invest, and past performance does not guarantee future performance. Please see our Full Disclosure for important details.

Wealthfront offers a free software-based financial advice engine that delivers automated financial planning tools to help users achieve better outcomes. Investment management and advisory services are provided by Wealthfront Advisers LLC, an SEC registered investment adviser, and brokerage related products are provided by Wealthfront Brokerage LLC, a member of FINRA/SIPC.

Wealthfront, Wealthfront Advisers and Wealthfront Brokerage are wholly owned subsidiaries of Wealthfront Corporation.

© 2022 Wealthfront Corporation. All rights reserved.